About, Disclaimers, Contacts

"JVM Anatomy Quarks" is an ongoing mini-post series, where every post describes some elementary piece of knowledge about the JVM. The name underlines the fact that a single post cannot be taken in isolation, and most pieces described here are going to readily interact with each other.

The post should take about 5-10 minutes to read. As such, it goes deep for only a single topic, a single test, a single benchmark, a single observation. The evidence and discussion here might be anecdotal, not actually reviewed for errors, consistency, writing 'tyle, syntaxtic and semantically errors, duplicates, or also consistency. Use and/or trust this at your own risk.

Aleksey Shipilëv, JVM/Performance Geek

Shout out at Twitter: @shipilev; Questions, comments, suggestions: aleksey@shipilev.net

Questions

- Are there alignment constraints for Java objects?
- I have heard Java objects are 8-byte aligned, is that true?
- Can we fiddle with alignment to improve our compressed references story?

Theory

Many hardware implementations require accesses to data to be aligned, that is, they require that every access of N-byte width is done at an address that is an integer multiple of N. Even when this is not strictly required for plain accesses to data, special operations (notably, atomic operations) usually have alignment constraints too.
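The alignment rule above can be written down as a couple of bit tricks. This is only a minimal sketch: the class and method names (`AlignmentCheck`, `isAligned`, `alignUp`) are made up for illustration, and the code assumes the width is a power of two.

```java
// Minimal sketch of the alignment rule: an N-byte access is aligned
// when its address is an integer multiple of N. Names are hypothetical.
class AlignmentCheck {

    // True if address is a multiple of width; width must be a power of two.
    static boolean isAligned(long address, int width) {
        return (address & (width - 1)) == 0;
    }

    // Rounds address up to the next multiple of width (a power of two).
    static long alignUp(long address, int width) {
        return (address + width - 1) & ~(width - 1L);
    }

    public static void main(String[] args) {
        System.out.println("16 aligned by 8? " + isAligned(16, 8));
        System.out.println("12 aligned by 8? " + isAligned(12, 8));
        System.out.println("12 aligned up to 8: " + alignUp(12, 8));
    }
}
```

The same `alignUp` arithmetic is what turns a 12-byte header into a field offset of 16 for an 8-byte field.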

For example, x86 is generally receptive to misaligned reads and writes, and a misaligned CAS that spans two cache lines at once still works, but it tanks the throughput performance. Other architectures would just plainly refuse to do such an atomic operation, yielding a SIGBUS or another hardware exception. x86 also does not guarantee access atomicity for values that span multiple cache lines, which is a possibility when the access is misaligned. The Java specification, on the other hand, requires access atomicity for most types, and definitely for all volatile accesses.

So, if we have a long field in a Java object, and it takes 8 bytes in memory, we have to make sure it is aligned by 8 bytes for performance reasons. Or even for correctness reasons, if that field is volatile. In a simple approach,[1] two things need to happen for this to hold true: the field offset inside the object should be aligned by 8 bytes, and the object itself should be aligned by 8 bytes. That is indeed what we shall see if we peek into a java.lang.Long instance:[2]

$ java -jar jol-cli.jar internals java.lang.Long
# Running 64-bit HotSpot VM.
# Using compressed oop with 3-bit shift.
# Using compressed klass with 3-bit shift.
# Objects are 8 bytes aligned.
# Field sizes by type: 4, 1, 1, 2, 2, 4, 4, 8, 8 [bytes]
# Array element sizes: 4, 1, 1, 2, 2, 4, 4, 8, 8 [bytes]

java.lang.Long object internals:
 OFFSET  SIZE   TYPE DESCRIPTION                    VALUE
      0     4        (object header)                01 00 00 00
      4     4        (object header)                00 00 00 00
      8     4        (object header)                ce 21 00 f8
     12     4        (alignment/padding gap)
     16     8   long Long.value                     0
Instance size: 24 bytes
Space losses: 4 bytes internal + 0 bytes external = 4 bytes total

Here, the value field itself is at offset 16 (a multiple of 8), and the object is aligned by 8.

Even if there are no fields that require special treatment, there are still object headers that also need to be accessed atomically. It is technically possible to align the majority of Java objects by 4 bytes, rather than by 8 bytes; however, the runtime work required to pull that off is quite immense.

So, in HotSpot, the minimum object alignment is 8 bytes. Can it be larger, though? Sure it can, there is a VM option for that: -XX:ObjectAlignmentInBytes. And it comes with two consequences, one negative and one positive.
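To check which alignment a running VM actually uses, one option is to ask the VM for the flag value through the HotSpot diagnostic MXBean. This is a sketch under assumptions: it relies on the HotSpot-specific `com.sun.management.HotSpotDiagnosticMXBean`, and it assumes the VM exposes `ObjectAlignmentInBytes` through that interface. The class name `ObjectAlignmentProbe` and the `-1` fallback are made up for illustration.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Sketch: query the effective -XX:ObjectAlignmentInBytes value at runtime.
// Works only on VMs that provide the HotSpot diagnostic MXBean.
class ObjectAlignmentProbe {

    // Returns the alignment in bytes, or -1 if the VM does not expose the option
    // (hypothetical fallback value, chosen for this sketch).
    static int objectAlignment() {
        try {
            HotSpotDiagnosticMXBean bean =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            return Integer.parseInt(bean.getVMOption("ObjectAlignmentInBytes").getValue());
        } catch (RuntimeException e) {
            return -1;
        }
    }

    public static void main(String[] args) {
        System.out.println("Object alignment: " + objectAlignment() + " bytes");
    }
}
```

Running it with and without `-XX:ObjectAlignmentInBytes=16` on the command line should show the value change, provided the VM accepts the option.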

Instance Sizes Get Large

Of course, once the alignment gets larger, it means that the average space wasted per-object would also increase. See, for example, the object alignment increased to 16 and 128 bytes:

$ java -XX:ObjectAlignmentInBytes=16 -jar jol-cli.jar internals java.lang.Long

java.lang.Long object internals:
 OFFSET  SIZE   TYPE DESCRIPTION                    VALUE
      0     4        (object header)                01 00 00 00
      4     4        (object header)                00 00 00 00
      8     4        (object header)                c8 10 01 00
     12     4        (alignment/padding gap)
     16     8   long Long.value                     0
     24     8        (loss due to the next object alignment)
Instance size: 32 bytes
Space losses: 4 bytes internal + 8 bytes external = 12 bytes total

$ java -XX:ObjectAlignmentInBytes=128 -jar jol-cli.jar internals java.lang.Long

java.lang.Long object internals:
 OFFSET  SIZE   TYPE DESCRIPTION                    VALUE
      0     4        (object header)                01 00 00 00
      4     4        (object header)                00 00 00 00
      8     4        (object header)                a8 24 01 00
     12     4        (alignment/padding gap)
     16     8   long Long.value                     0
     24   104        (loss due to the next object alignment)
Instance size: 128 bytes
Space losses: 4 bytes internal + 104 bytes external = 108 bytes total

Hell, 128 bytes per instance that only has 8 bytes of useful data seems excessive. Why would anyone do that?
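The instance sizes in the listings above follow from rounding the end of the last field up to the object alignment. Here is a minimal sketch of that arithmetic. It assumes the 12-byte header shown by JOL, and the class and method names are hypothetical, not part of JOL or HotSpot.

```java
// Sketch: reproduce the java.lang.Long instance sizes from the listings.
// Assumes a 12-byte object header, as printed in the JOL output above.
class LongInstanceSize {
    static final int HEADER_BYTES = 12; // three 4-byte header words in the listing
    static final int LONG_BYTES = 8;

    // Rounds value up to the next multiple of alignment (a power of two).
    static long alignUp(long value, long alignment) {
        return (value + alignment - 1) & ~(alignment - 1);
    }

    // The long field goes to the first 8-byte-aligned offset past the header.
    static long fieldOffset() {
        return alignUp(HEADER_BYTES, LONG_BYTES);
    }

    // End of the last field, rounded up to the object alignment.
    static long instanceSize(int objectAlignment) {
        return alignUp(fieldOffset() + LONG_BYTES, objectAlignment);
    }

    public static void main(String[] args) {
        long used = fieldOffset() + LONG_BYTES;
        for (int alignment : new int[]{8, 16, 128}) {
            long size = instanceSize(alignment);
            System.out.println("alignment=" + alignment
                    + " size=" + size
                    + " external loss=" + (size - used));
        }
    }
}
```

For alignments of 8, 16, and 128 bytes this gives 24, 32, and 128 bytes, with external losses of 0, 8, and 104 bytes, which agrees with the listings.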

Compressed References Thresholds Get Shifted

(pun intended)

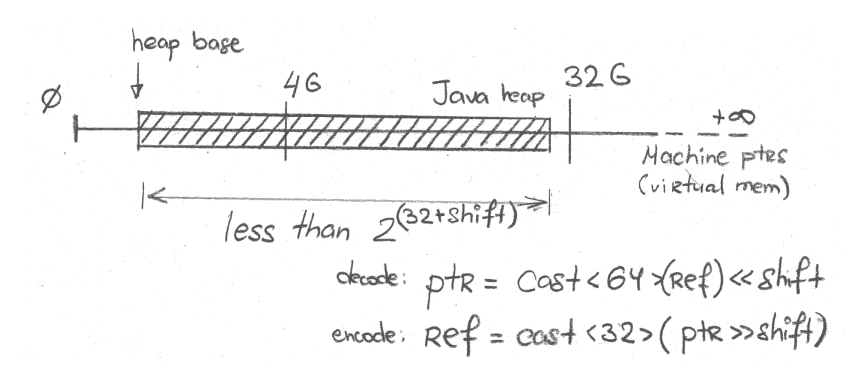

Remember this picture from the "Compressed References" quark?

It says that we can have compressed references enabled on heaps larger than 4 GB by shifting the reference by a few bits. The length of that shift depends on how many lower bits in the reference are zero. That is, how objects are aligned! With 8-byte alignment by default, the 3 lower bits are zero, we shift by 3, and we get 2^(32+3) bytes = 32 GB addressable with compressed references. And with 16-byte alignment, we have 2^(32+4) bytes = 64 GB heap with compressed references!
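The shift arithmetic can be sketched in a few lines. This is a simplified model, not HotSpot code: it assumes a zero heap base, so a reference is just the address shifted right, and all names here (`CompressedRefLimit`, `shiftFor`, `maxHeapBytes`, `encode`, `decode`) are hypothetical.

```java
// Sketch of shifted 32-bit references, assuming a zero heap base.
class CompressedRefLimit {

    // Number of always-zero low bits in an address aligned to objectAlignment.
    static int shiftFor(int objectAlignment) {
        return Integer.numberOfTrailingZeros(objectAlignment);
    }

    // Largest heap, in bytes, reachable by a 32-bit reference with that shift.
    static long maxHeapBytes(int objectAlignment) {
        return 1L << (32 + shiftFor(objectAlignment));
    }

    // Drops the zero low bits; the result is treated as an unsigned 32-bit value.
    static int encode(long address, int shift) {
        return (int) (address >>> shift);
    }

    // Restores the full address from the unsigned 32-bit reference.
    static long decode(int ref, int shift) {
        return (ref & 0xFFFFFFFFL) << shift;
    }

    public static void main(String[] args) {
        for (int alignment : new int[]{8, 16, 32}) {
            System.out.println("alignment=" + alignment
                    + " shift=" + shiftFor(alignment)
                    + " max heap=" + (maxHeapBytes(alignment) >> 30) + " GB");
        }
    }
}
```

Under this model, an address at the 40 GB mark cannot be encoded with a 3-bit shift, but it round-trips through `encode` and `decode` with a 4-bit shift.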

Experiment

So, object alignment blows up instance sizes, which increases heap occupancy, but allows compressed references on larger heaps, which decreases heap occupancy! Do these things cancel each other? Depends on the structure of the heap. We could use the same test we had before, but let’s automate it a little.

Make the little test that tries to identify the minimum heap to accommodate the given number of objects, like this:

import java.io.*;
import java.util.*;
import java.util.stream.IntStream;

public class CompressedOopsAllocate {

    // Heap sizes are in megabytes; they are passed to the child VM as -Xmx<heap>m.
    static final int MIN_HEAP = 0 * 1024;
    static final int MAX_HEAP = 100 * 1024;
    static final int HEAP_INCREMENT = 128;

    static Object[] arr;

    public static void main(String... args) throws Exception {
        // Child mode: allocate the requested number of small byte[] arrays and exit.
        if (args.length >= 1) {
            int size = Integer.parseInt(args[0]);
            arr = new Object[size];
            IntStream.range(0, size).parallel().forEach(x -> arr[x] = new byte[(x % 20) + 1]);
            return;
        }

        // Driver mode: VM configurations to compare.
        String[] opts = new String[]{
            "",
            "-XX:-UseCompressedOops",
            "-XX:ObjectAlignmentInBytes=16",
            "-XX:ObjectAlignmentInBytes=32",
            "-XX:ObjectAlignmentInBytes=64",
        };

        int[] lastPasses = new int[opts.length];
        int[] passes = new int[opts.length];
        Arrays.fill(lastPasses, MIN_HEAP);

        // size is in millions of objects.
        // Note: size * 1000 * 1000 overflows int once size exceeds 2147.
        for (int size = 0; size < 3000; size += 30) {
            for (int o = 0; o < opts.length; o++) {
                passes[o] = 0;
                // Start from the last heap size that worked and grow until the child VM succeeds.
                for (int heap = lastPasses[o]; heap < MAX_HEAP; heap += HEAP_INCREMENT) {
                    if (tryWith(size * 1000 * 1000, heap, opts[o])) {
                        passes[o] = heap;
                        lastPasses[o] = heap;
                        break;
                    }
                }
            }
            System.out.println(size + ", " + Arrays.toString(passes).replaceAll("[\\[\\]]",""));
        }
    }

    // Runs a child VM with the given heap size and options; true if it exits cleanly.
    private static boolean tryWith(int size, int heap, String... opts) throws Exception {
        List<String> command = new ArrayList<>();
        command.add("java");
        command.add("-XX:+UnlockExperimentalVMOptions");
        command.add("-XX:+UseEpsilonGC");
        command.add("-XX:+UseTransparentHugePages"); // faster this way
        command.add("-XX:+AlwaysPreTouch"); // even faster this way
        command.add("-Xmx" + heap + "m");
        Arrays.stream(opts).filter(x -> !x.isEmpty()).forEach(command::add);
        command.add(CompressedOopsAllocate.class.getName());
        command.add(Integer.toString(size));

        Process p = new ProcessBuilder().command(command).start();
        return p.waitFor() == 0;
    }
}

Running this test on a large machine that can go up to 100+ GB heap would yield predictable results. Let us start with average object sizes to set the narrative. Note these are average object sizes in that particular test, which allocates lots of small byte[] arrays. Here:

Not surprisingly, increasing alignment does inflate the average object sizes: 16-byte and 32-byte alignments have managed to increase the object size "just a little", while 64-byte alignment exploded the average considerably. Note that object alignment basically tells the minimum object size, and once that minimum goes up, average also goes up.
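A back-of-the-envelope model shows why the average moves this way. It is a sketch, not a measurement: it assumes a 16-byte array header (12-byte object header plus 4-byte length, as with compressed class pointers), it uses the byte[] lengths 1..20 that the test allocates, and it ignores the reference slots in the holder array. The class and method names are hypothetical.

```java
// Sketch: modeled average size of the byte[] arrays allocated by the test,
// for different object alignments. Assumes a 16-byte array header.
class AverageArraySize {
    static final int ARRAY_HEADER_BYTES = 16; // assumption: 12-byte header + 4-byte length

    // Rounds value up to the next multiple of alignment (a power of two).
    static long alignUp(long value, long alignment) {
        return (value + alignment - 1) & ~(alignment - 1);
    }

    // Average over lengths 1..20, which is what new byte[(x % 20) + 1] cycles through.
    static double averageSize(int objectAlignment) {
        long total = 0;
        for (int len = 1; len <= 20; len++) {
            total += alignUp(ARRAY_HEADER_BYTES + len, objectAlignment);
        }
        return total / 20.0;
    }

    public static void main(String[] args) {
        for (int alignment : new int[]{8, 16, 32, 64}) {
            System.out.println("alignment=" + alignment
                    + " modeled average size=" + averageSize(alignment) + " bytes");
        }
    }
}
```

Under these assumptions the model gives 30.4, 35.2, 38.4, and 64 bytes for alignments of 8, 16, 32, and 64 bytes: every array fits in one 64-byte slot, so that slot size becomes the average.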

As we have seen in the "Compressed References" quark, compressed references would normally fail around 32 GB. But notice that higher alignments prolong this, and the higher the alignment, the longer it takes to fail. For example, 16-byte alignment would have a 4-bit shift in compressed references, and fail around 64 GB. 32-byte alignment would have a 5-bit shift and fail around 128 GB.[3] In this particular test, on some object counts, the object size inflation due to higher alignment is balanced by the lower footprint due to compressed references being active. Of course, when compressed references finally get disabled, the alignment costs catch up.

This can be seen more clearly in the "minimal heap size" graph:

Here, we clearly see the 32 GB and 64 GB failure thresholds. Notice how 16-byte and 32-byte alignment took less heap in some configurations, piggybacking on more efficient reference encoding. That improvement is not universal: when 8-byte alignment is enough or when compressed references fail, higher alignments waste memory.

Conclusions

Object alignment is a funny thing to tinker with. While it inflates the object sizes considerably, it can also make the overall footprint lower, once compressed references come into the picture. Sometimes it makes sense to bump the alignment a little bit, to reap the footprint benefits [sic!]. In many cases, however, this would degrade the overall footprint. A careful study of the given application and the given dataset is required to figure out whether bumping the alignment pays off or not. It is a sharp tool, use it with care.